Getting Started with Snowflake Data Exchange

Get Live Data from Snowflake Data Exchange

Set Up a New Account

If you already have access to a Snowflake account,

congratulations! You can skip these steps. If not,

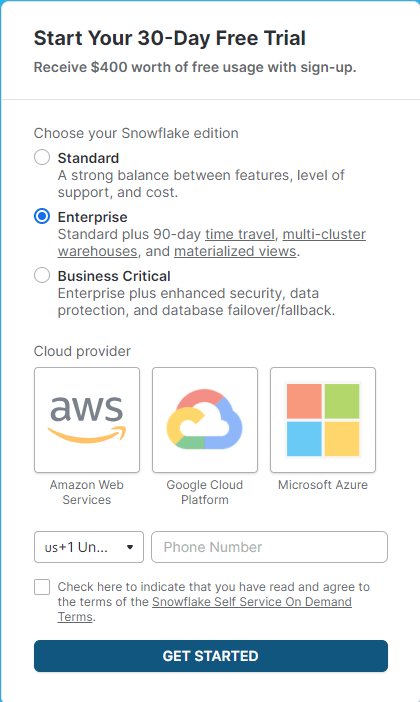

go to trial.snowflake.com. On the below page, indicate your edition (Enterprise is the correct choice for 95% of applications) and your chosen cloud. Within minutes,

you will receive an email with your account id. It looks something like XX1234-us-west.azure.snowflake.com. When you activate the link, create your account admin username and password,

and you are ready to go.
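To confirm that the new account works and to see which account, region, user and role your worksheet session is using, a quick check like the one below can help. It uses only Snowflake's built-in context functions; the column aliases are illustrative names chosen here, not anything Snowflake requires.

```sql
-- Show the context of the current worksheet session
-- (aliases are illustrative; the functions are Snowflake built-ins)
SELECT CURRENT_ACCOUNT() AS account_locator,
       CURRENT_REGION()  AS region,
       CURRENT_USER()    AS user_name,
       CURRENT_ROLE()    AS active_role;
```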

Once your account is set up, open a worksheet and, as my first recommendation, run the following commands:

```sql
CREATE WAREHOUSE MY_COMPUTE_WAREHOUSE;

ALTER WAREHOUSE MY_COMPUTE_WAREHOUSE SET WAREHOUSE_SIZE = 'XSMALL' AUTO_SUSPEND = 60;
```

This creates a new compute warehouse, sets it to the smallest size (X-Small), and suspends it automatically after 60 seconds of inactivity. Unless you are working with very large data sets, these settings are plenty for the kind of exploration we will be doing, and they stretch your $400 of free trial credit as far as possible (if you stick to an X-Small warehouse, you will easily get a full month of querying in!).
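If you prefer, the same setup can be written as one statement instead of the two commands above, and then checked with `SHOW WAREHOUSES`. An X-Small warehouse consumes 1 credit per hour while running, billed per second with a 60-second minimum each time it resumes, which is why a short auto-suspend saves credit. The `AUTO_RESUME` and `INITIALLY_SUSPENDED` options are additions of mine, not part of the original recommendation; treat this as a sketch.

```sql
-- One-statement alternative to CREATE + ALTER.
-- IF NOT EXISTS makes this a no-op if the warehouse was already created above.
CREATE WAREHOUSE IF NOT EXISTS MY_COMPUTE_WAREHOUSE
  WITH WAREHOUSE_SIZE = 'XSMALL'      -- smallest size, 1 credit per hour while running
       AUTO_SUSPEND = 60              -- suspend after 60 seconds of inactivity
       AUTO_RESUME = TRUE             -- (addition) restart automatically when a query arrives
       INITIALLY_SUSPENDED = TRUE;    -- (addition) consume no credits until the first query

-- Verify: check the "size" and "auto_suspend" columns in the output
SHOW WAREHOUSES LIKE 'MY_COMPUTE_WAREHOUSE';
```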

Connect and Search the Data Exchange

From your main page, click the Marketplace icon and start searching through the available data providers.

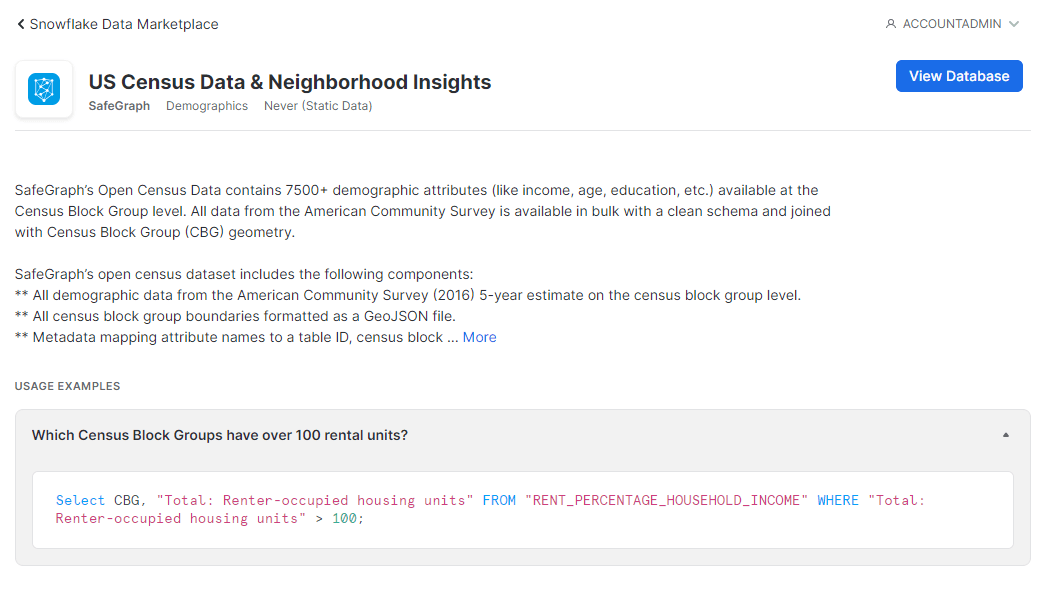

From here, make sure the ACCOUNTADMIN role is selected (you may need to switch to it in order to subscribe), then select a listing to subscribe to it. Standard listings are available immediately. Subscribing to a personalized listing requires some interaction with the provider and may involve a separate subscription fee. In the image below, we use the data set provided by SafeGraph to power some of the next steps.

Once you have subscribed, you will see all of the listing's tables in a new database back in Snowflake (you may have to refresh the page).
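If you would rather check from a worksheet than refresh the object browser, the `SHOW` commands below list the new database and its contents. The database name is the one from this walkthrough; the name generated for your subscription will likely differ, so adjust the pattern and name accordingly.

```sql
-- Find the imported database (LIKE patterns are case-insensitive)
SHOW DATABASES LIKE 'SAFEGRAPH%';

-- Drill into its schemas and tables; replace the name with your own database's name
SHOW SCHEMAS IN DATABASE "SAFEGRAPH_AZURE_WESTEUROPE_SAFEGRAPH_CENSUS_DATA";
SHOW TABLES  IN DATABASE "SAFEGRAPH_AZURE_WESTEUROPE_SAFEGRAPH_CENSUS_DATA";
```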

Then, in line with best practice, run the following statement so that a role other than ACCOUNTADMIN can use the database. (Limit the number of people who have the ACCOUNTADMIN role, and do not use it for querying data.)

```sql
GRANT IMPORTED PRIVILEGES ON DATABASE "SAFEGRAPH_AZURE_WESTEUROPE_SAFEGRAPH_CENSUS_DATA" TO ROLE SYSADMIN;
```
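SYSADMIN also needs a warehouse to run queries. Assuming you created MY_COMPUTE_WAREHOUSE while using ACCOUNTADMIN (typical in a fresh trial), SYSADMIN cannot use it yet: privileges granted to a lower role are inherited by the roles above it, but not the other way round. A minimal sketch for granting usage and then switching the worksheet to SYSADMIN:

```sql
-- Run as ACCOUNTADMIN (assumed here to own the warehouse created earlier)
GRANT USAGE ON WAREHOUSE MY_COMPUTE_WAREHOUSE TO ROLE SYSADMIN;

-- From now on, query as SYSADMIN rather than ACCOUNTADMIN
USE ROLE SYSADMIN;
USE WAREHOUSE MY_COMPUTE_WAREHOUSE;
USE DATABASE "SAFEGRAPH_AZURE_WESTEUROPE_SAFEGRAPH_CENSUS_DATA";
```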

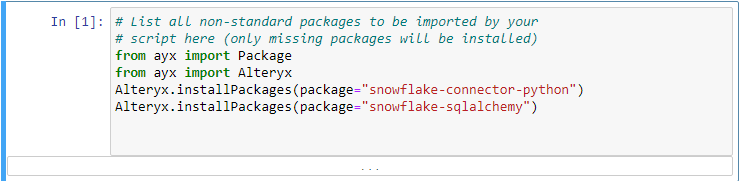

From here you are ready to query this data. However, as we believe in no-code/low-code, the next post will show you how to connect to this data from Alteryx, and that is where the fun begins!
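In the meantime, if you want a first query in the worksheet without knowing any table names in advance, the database's `INFORMATION_SCHEMA` can tell you what is there. This is a sketch using the database name from this walkthrough; shared data is often exposed as views, whose `row_count` is NULL, hence the `NULLS LAST`.

```sql
-- List up to 20 tables/views in the shared database, largest tables first
SELECT table_schema,
       table_name,
       table_type,
       row_count
FROM "SAFEGRAPH_AZURE_WESTEUROPE_SAFEGRAPH_CENSUS_DATA".INFORMATION_SCHEMA.TABLES
WHERE table_schema <> 'INFORMATION_SCHEMA'   -- skip the metadata schema itself
ORDER BY row_count DESC NULLS LAST
LIMIT 20;
```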